Improving transparency in AI by exploring new avenues for human feedback, robustness, and documentation

Before starting my Mozilla Trustworthy AI fellowship, I spent 3 years leading AI impact assessment projects as a core member of the Responsible AI team at a multinational consulting company. My biggest challenge was to better understand AI systems' failure modes from the perspective of real-world users' experiences of algorithmic harm and bias, at both the individual and collective levels. I was very frustrated by the obstacles to creating avenues for consumers to participate in the audit and impact assessment of AI systems.

Being part of the Mozilla Foundation has enabled me to work on something that was not possible to explore within industry or academic institutions, largely because of the friction among many different stakeholders. However, that experience inspired me to think critically about friction and to see it in a new light.

One of the first questions we need to ask is: what are the biggest challenges to improved transparency in AI?

The power and information asymmetries between people and the consumer technology companies that employ algorithmic systems are legitimized through contractual agreements that have failed to provide people with meaningful consent and recourse mechanisms. In a research paper, Deborah Raji et al. give a number of examples in the context of AI systems used for detecting unemployment benefit fraud, determining access to healthcare benefits, content moderation, and more.

Oftentimes, when interacting with AI systems, such as when YouTube recommends a video we might like or when we see an advertisement in our social media feed, we have no meaningful way to express disagreement with what we experience. You might say that people don't care about contestability in their interactions with AI, and you might be right. However, the 22,722 volunteers who participated in Mozilla's RegretsReporter project show that there are people eager to report problems they experienced while interacting with YouTube's recommender system. More broadly, I argue that given appropriate tools and platform affordances, everyday consumers are a key stakeholder in the continuous evaluation and improvement of algorithmic systems.

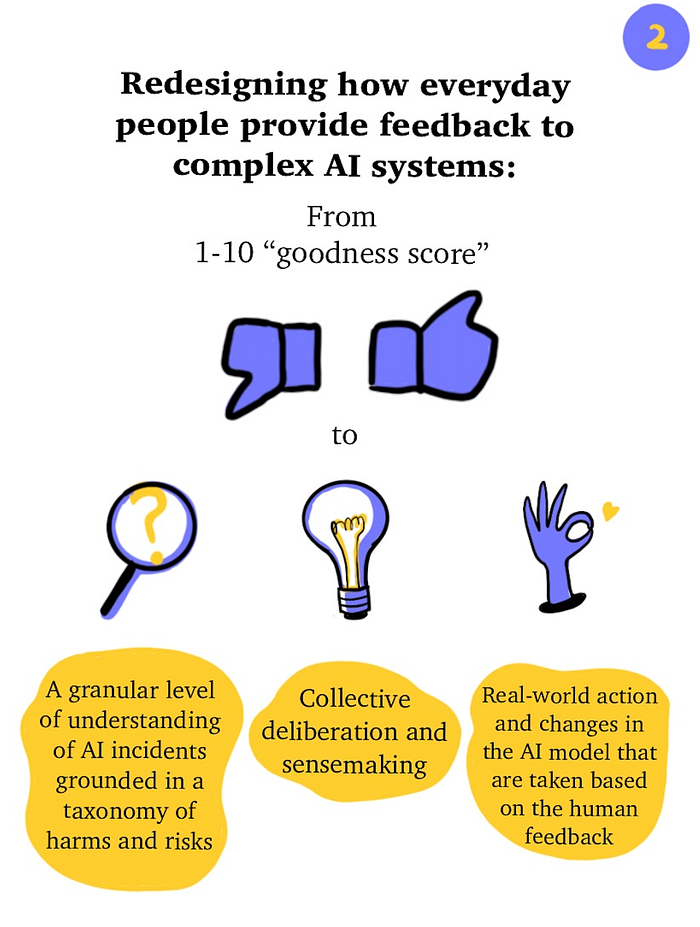

What if we could redesign how everyday people provide feedback to complex AI systems, so as to enable:

- Formal specification of AI incidents or controversies grounded in a taxonomy of potential algorithmic harms and risks

- Collective deliberation and sensemaking about AI incidents or controversies

- Real-world actions and changes in the target algorithmic systems, taken up by MLOps teams on the basis of that human feedback

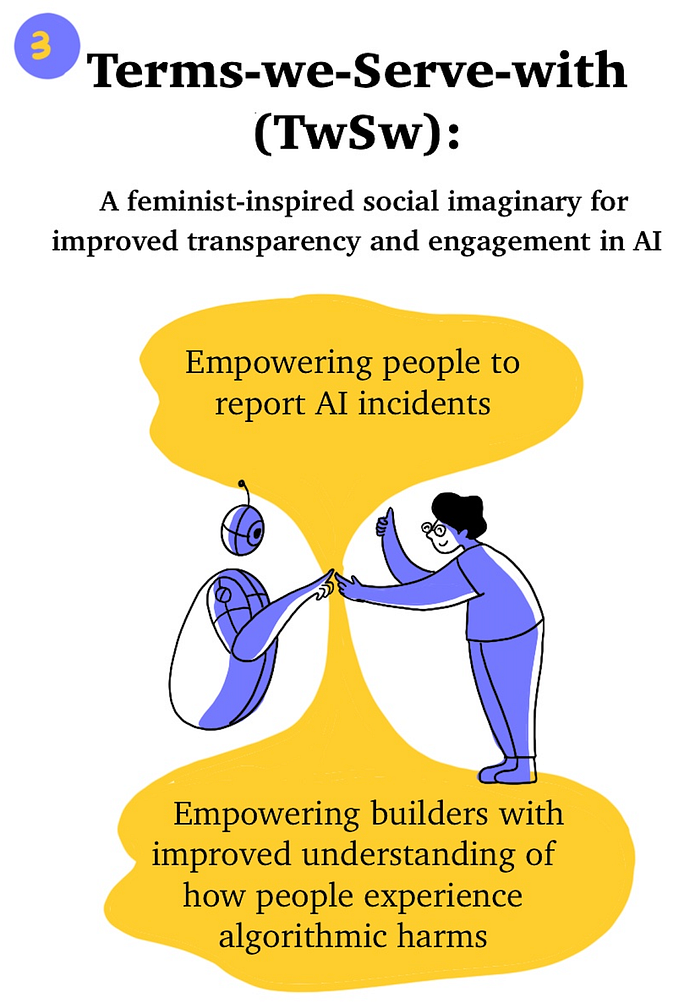

Together with collaborators Megan Ma and Renee Shelby, we explore this further through a speculative provocation we frame as the Terms-we-Serve-with (TwSw) agreement: a new social, computational, and legal contract for restructuring power asymmetries and center-periphery dynamics in how everyday consumers interact with AI.

The TwSw is a relational, living socio-technical artifact. It is not about a transaction (the fictional "I agree to the terms of service"), but about a design reorientation that brings better understanding of, and transparency into, how the decisions an algorithmic system makes influence our everyday lives.

In developing terms-we-serve-with agreements, we partner with:

(1) community-driven projects that seek to leverage AI in a way that is aligned with their mission and values

(2) AI builders who seek to enable improved robustness, transparency, and human oversight in understanding the downstream impacts of their technology

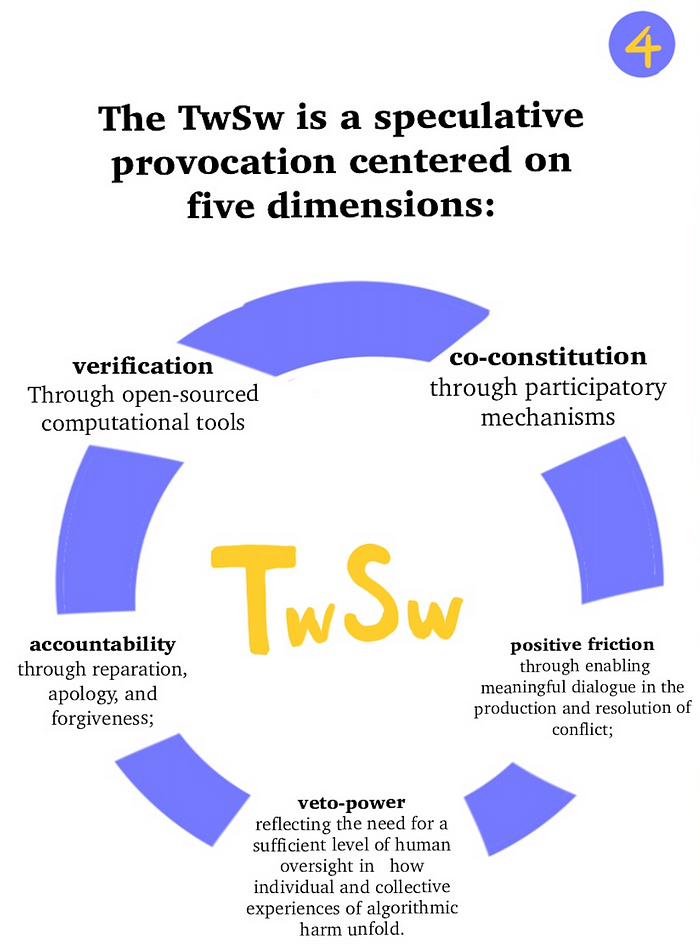

The technical component of the terms-we-serve-with is a reporting and documentation mechanism grounded in formal logic and a taxonomy of algorithmic harms and risks. We are developing a prototype that uses logic programming to build a dataset of user-perceived examples of harmful interactions between people and large language models. Examples of such harms include representational harms such as social stereotyping, allocative harms such as opportunity or economic loss, quality-of-service harms, and broader societal harms such as misinformation and the erosion of democracy. Learn more in a paper we presented at the Connected Life: Designing Digital Futures conference at the Oxford Internet Institute.
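To make the idea of a taxonomy-grounded reporting mechanism more concrete, here is a minimal sketch in Python. It is not our prototype: the prototype uses logic programming, while this sketch only imitates a recursive Prolog-style `is_a` rule with a plain loop. The category names come from the examples above, but the `TAXONOMY` encoding, the `HarmReport` fields, and the `validate` and `group_by_category` helpers are all hypothetical, chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical encoding of the harm taxonomy: each harm type maps to its parent.
# Leaf names follow the examples in the text; the structure is an assumption.
TAXONOMY = {
    "social_stereotyping": "representational_harm",
    "opportunity_loss": "allocative_harm",
    "economic_loss": "allocative_harm",
    "misinformation": "societal_harm",
    "erosion_of_democracy": "societal_harm",
    "representational_harm": "algorithmic_harm",
    "allocative_harm": "algorithmic_harm",
    "quality_of_service_harm": "algorithmic_harm",
    "societal_harm": "algorithmic_harm",
}


def is_a(harm: str, category: str) -> bool:
    """Walk up the parent links, mirroring the logic rules
    is_a(X, Y) :- parent(X, Y).
    is_a(X, Z) :- parent(X, Y), is_a(Y, Z)."""
    while harm in TAXONOMY:
        harm = TAXONOMY[harm]
        if harm == category:
            return True
    return False


@dataclass
class HarmReport:
    """A hypothetical user report about one interaction with a model."""
    reporter: str
    model_output: str
    harm_type: str
    evidence: List[str] = field(default_factory=list)


def validate(report: HarmReport) -> List[str]:
    """Return the reasons a report is not yet a well-formed, verifiable claim."""
    problems = []
    if report.harm_type not in TAXONOMY:
        problems.append(f"unknown harm type: {report.harm_type}")
    if not report.evidence:
        problems.append("no supporting evidence attached")
    return problems


def group_by_category(reports: List[HarmReport], category: str) -> List[HarmReport]:
    """Collect valid reports that fall under a category, e.g. for collective review."""
    return [r for r in reports if not validate(r) and is_a(r.harm_type, category)]


reports = [
    HarmReport("user_1", "<model output>", "social_stereotyping", ["screenshot_1.png"]),
    HarmReport("user_2", "<model output>", "economic_loss", []),
    HarmReport("user_3", "<model output>", "misinformation", ["chat_log.txt"]),
]

for r in reports:
    print(r.reporter, validate(r) or "valid")
print([r.reporter for r in group_by_category(reports, "algorithmic_harm")])
```

Here, the report from `user_2` is flagged because it carries no evidence, and only the two valid reports are grouped under the root category. Keeping the taxonomy as explicit rules rather than learned labels is what allows a report to be checked and traced back to a named harm category.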

We hope that the terms-we-serve-with serves as a provocation and as an example of what innovation could look like for communities and businesses seeking to restructure power dynamics and information asymmetries in the context of individual and collective experiences of the downstream impacts of AI. We imagine a future where:

- Consumers have a way to document, collect evidence, and make verifiable claims about their experiences of interacting with AI

- AI builders have a neuro-symbolic formal logic layer around an ML model that gives them improved understanding of its downstream impacts

Does your community or AI project need a Terms-we-Serve-with? Share more through this form and we’ll follow up and include you in upcoming workshops and other activities we’re planning.

All illustrations above were created by Yan Li.